A few months ago, one of my LinkedIn connections introduced me to the Cloud Resume Challenge. The challenge asks participants to build a "serverless" resume website using HTML/CSS and AWS cloud infrastructure, deploy that infrastructure using IaC (Infrastructure as Code), and set up a CI/CD pipeline for continuous delivery.

At the time, I was studying for the AWS Cloud Practitioner certification and planned on earning the AWS Solutions Architect Associate certification shortly after. After 65+ hours of studying and several completed labs, I passed both exams and earned two new AWS certifications.

Although the Cloud Resume Challenge concluded on July 31, 2020, after a full #100DaysOfCloud I decided to dive in and complete the challenge anyway.

The Requirements

- Get AWS Certified

- Build a website with HTML

- Style the site with CSS

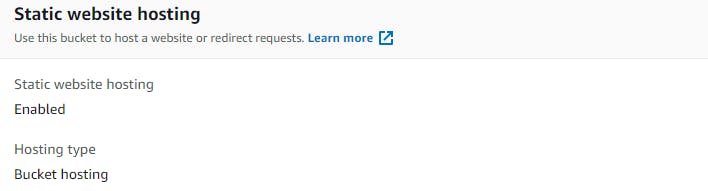

- Host the static site with Amazon S3

- Use HTTPS for security, via an Amazon CloudFront distribution

- Use a custom domain name and set up DNS using Route 53

- Write some JavaScript to display a visitor count on the site

- Set up a DynamoDB table in AWS to store the visitor count

- Build an API and an AWS Lambda function that will communicate between the website and the database

- Write the Lambda function in Python

- Write and implement Python tests for the code

- Deploy the back-end AWS infrastructure (API, database, and Lambda function) using infrastructure as code, not by clicking around in the AWS console

- Use source control for the site via GitHub

- Implement automated CI/CD for the front end

- Implement automated CI/CD for the back end

- Write a blog post about the experience

Front-End

The challenge starts with building an HTML/CSS version of your resume. Because I had already built an HTML/CSS resume website in my high school days, and because HTML/CSS isn't the main focus of the project, I decided to use a template and modify it as needed.

Once the website was built, I needed to host it somewhere, so I used Amazon Simple Storage Service (S3) and its static website hosting capability.

The next step was to set up HTTPS. I set up a CloudFront distribution for the site, pointed a custom domain purchased through Route 53 at that distribution, and used AWS Certificate Manager to obtain an SSL/TLS certificate.

Back-End

With the front-end out of the way, I moved on to setting up the back-end for the site.

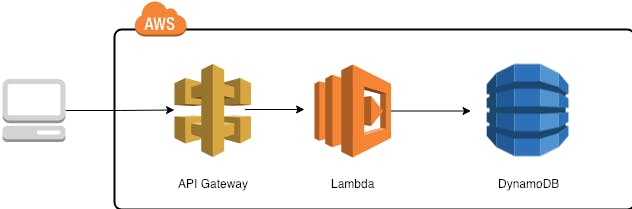

The back-end consists of:

- The DynamoDB table, which contains the visitor count

- The API Gateway API used for communicating between the site and the database

- The Lambda function, which runs when the API is called and updates the visitor count stored in DynamoDB
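
To make the Lambda piece concrete, here is a minimal sketch of what a visitor-count function could look like. It is an illustration, not the code behind this site: the table name `visitor-count`, the `TABLE_NAME` environment variable, the key `id = "resume"`, the `visits` attribute, and the `{"count": N}` response shape are all assumptions.

```python
import json
import os


def increment_visitor_count(table, item_id="resume"):
    """Atomically add 1 to the counter item and return the new value."""
    response = table.update_item(
        Key={"id": item_id},  # assumed partition key name and value
        # ADD creates the attribute on first use, then increments it.
        UpdateExpression="ADD visits :inc",
        ExpressionAttributeValues={":inc": 1},
        ReturnValues="UPDATED_NEW",
    )
    # DynamoDB returns numbers as Decimal, so convert before serializing.
    return int(response["Attributes"]["visits"])


def lambda_handler(event, context, table=None):
    """Entry point invoked through the API; `table` is injectable for tests."""
    if table is None:
        import boto3  # imported lazily so the module loads without AWS access

        table_name = os.environ.get("TABLE_NAME", "visitor-count")  # assumed
        table = boto3.resource("dynamodb").Table(table_name)

    count = increment_visitor_count(table)
    return {
        "statusCode": 200,
        "headers": {
            "Content-Type": "application/json",
            # Lets a script served from another origin read the response.
            "Access-Control-Allow-Origin": "*",
        },
        "body": json.dumps({"count": count}),
    }
```

Doing the increment inside a single `update_item` call keeps the update atomic, so two visitors arriving at the same moment cannot overwrite each other's count.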

The challenge requires you to deploy the back-end resources using AWS SAM (Serverless Application Model). I first spent some time looking over AWS's SAM template syntax, and then wrote a SAM template that defined all the resources I wanted to build (database, API, and Lambda function). I then deployed the stack using the SAM CLI, which created these resources in my AWS account.

JavaScript

At this point the site and the infrastructure were in place. However, nothing in my website called the API or displayed the visitor count yet.

I wrote some JavaScript in my front-end website code that runs when the site is loaded. The JavaScript calls the API, which triggers the Lambda function that updates the visitor count in DynamoDB. The API then returns the new visitor count, which is displayed on the website.
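
The site makes this call from JavaScript in the browser, but the same round trip can be exercised outside the browser, which is handy for checking the API after a deploy. The following is a rough Python sketch under stated assumptions: the URL is a placeholder, and the `count` field in the JSON body is a guess at the response shape rather than the site's actual contract.

```python
import json
import urllib.request

# Placeholder endpoint; substitute the real API URL.
API_URL = "https://example.com/count"


def parse_count(body):
    """Pull the visitor count out of a JSON body like {"count": 42}."""
    return int(json.loads(body)["count"])


def fetch_visitor_count(url=API_URL, opener=urllib.request.urlopen):
    """Call the API once (which also increments the counter) and return the count."""
    with opener(url, timeout=10) as response:
        return parse_count(response.read().decode("utf-8"))
```

Calling `fetch_visitor_count()` twice in a row against a live endpoint should return two consecutive numbers, since every request triggers the increment.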

CI/CD

The last stage in the project was to set up a "continuous integration/continuous delivery" (CI/CD) pipeline using GitHub Actions.

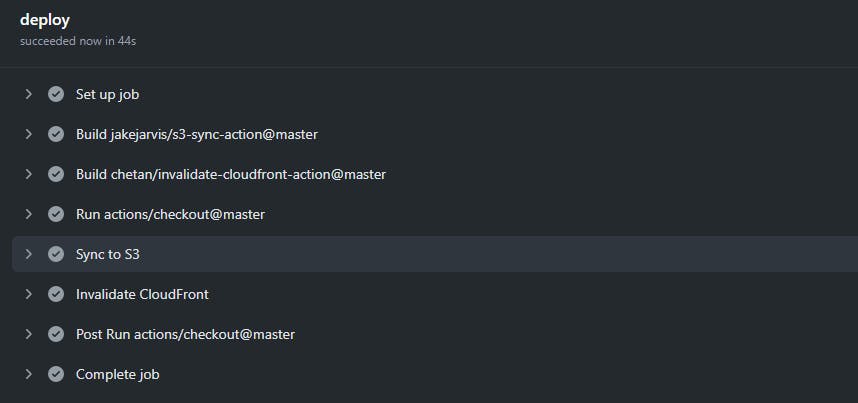

I first set up a private GitHub repository to store my front-end code. Then, I set up GitHub Actions to sync any changes in the repository to the Amazon S3 bucket and to invalidate the CloudFront cache.
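
The workflow itself is defined in YAML, but the two steps it performs, uploading files and invalidating the cache, can be sketched in Python with boto3 clients. This is a sketch, not the workflow used for this site: the function names are hypothetical, and unlike a true sync it only uploads files and never deletes objects that were removed from the repository.

```python
import mimetypes
import time
from pathlib import Path


def upload_site(s3_client, site_dir, bucket):
    """Upload every file under site_dir to the bucket; return the keys uploaded."""
    root = Path(site_dir)
    keys = []
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        key = path.relative_to(root).as_posix()
        # Set Content-Type so browsers render pages instead of downloading them.
        content_type = mimetypes.guess_type(path.name)[0] or "binary/octet-stream"
        s3_client.upload_file(
            str(path), bucket, key, ExtraArgs={"ContentType": content_type}
        )
        keys.append(key)
    return keys


def invalidate_cache(cloudfront_client, distribution_id, paths=("/*",)):
    """Ask CloudFront to drop cached copies so visitors see the new files."""
    cloudfront_client.create_invalidation(
        DistributionId=distribution_id,
        InvalidationBatch={
            "Paths": {"Quantity": len(paths), "Items": list(paths)},
            # Must be unique per request; a timestamp is enough here.
            "CallerReference": str(time.time()),
        },
    )
```

In real use the clients would come from `boto3.client("s3")` and `boto3.client("cloudfront")`, with credentials supplied to the workflow as repository secrets.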

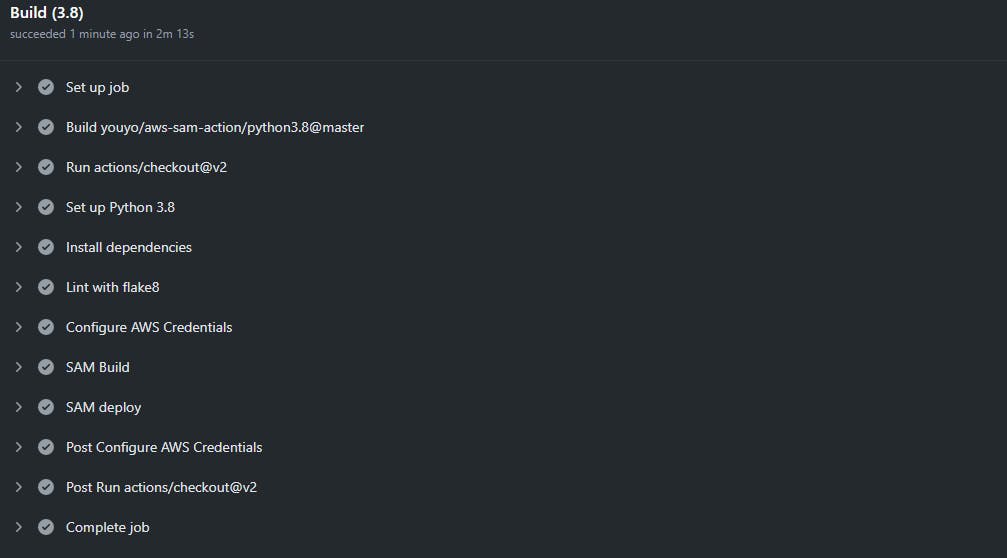

The CI/CD for the back-end code was a little more difficult. I set up a private GitHub repository for my back-end code. The repository contains the SAM template and Python files used in the project.

Per the requirements, the GitHub workflow should first run Python tests and deploy the stack to AWS only if the tests pass.

I opted not to include any tests in my project, as I am mainly focused on the process of interacting with the AWS resources and less on the code development side of it.

I set up the GitHub workflow for the back end to lint the Python code, then build and deploy the stack to AWS.
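
For anyone who does want the test step, here is a minimal sketch of what such tests might look like, using only the standard library. The function under test is a hypothetical stand-in for a visitor-count update (the key, attribute name, and update expression are assumptions), and the DynamoDB table is replaced by a mock so the tests run without AWS access.

```python
import unittest
from unittest.mock import MagicMock


def increment_visitor_count(table):
    """Hypothetical stand-in for the Lambda function's core logic."""
    response = table.update_item(
        Key={"id": "resume"},
        UpdateExpression="ADD visits :inc",
        ExpressionAttributeValues={":inc": 1},
        ReturnValues="UPDATED_NEW",
    )
    return int(response["Attributes"]["visits"])


class IncrementVisitorCountTest(unittest.TestCase):
    def test_returns_updated_count(self):
        table = MagicMock()
        table.update_item.return_value = {"Attributes": {"visits": 5}}
        self.assertEqual(increment_visitor_count(table), 5)

    def test_uses_atomic_add(self):
        table = MagicMock()
        table.update_item.return_value = {"Attributes": {"visits": 1}}
        increment_visitor_count(table)
        # Inspect the keyword arguments passed to the mocked table.
        _, kwargs = table.update_item.call_args
        self.assertEqual(kwargs["UpdateExpression"], "ADD visits :inc")
        self.assertEqual(kwargs["ExpressionAttributeValues"], {":inc": 1})
```

A workflow could run these with `python -m unittest` before the build and deploy steps, so a failing test stops the deployment.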

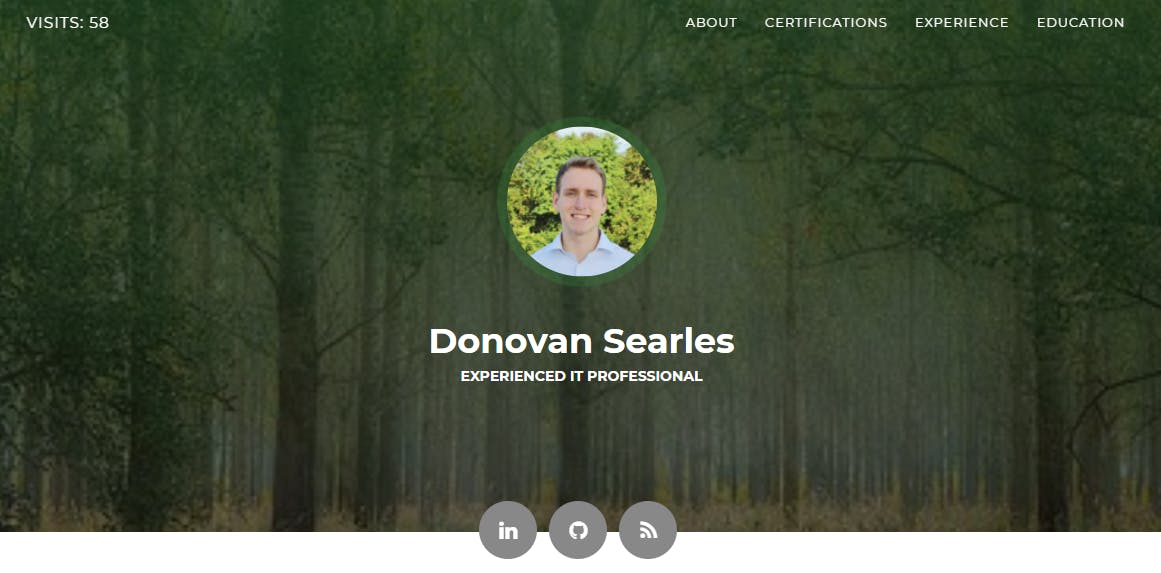

The Final Product

This experience has been very rewarding. I was able to put to use some of the knowledge I gained from the AWS Solutions Architect Associate and AWS Cloud Practitioner certifications. I was also able to dabble a bit in Python and get some hands-on experience with SAM.

Here is the final product: dsearles.com

What's next for me? I plan on knocking out a few more AWS certifications and potentially putting a few of those labs/projects on this site.

Special thanks to Forrest Brazeal for creating this project!

Update

Changes to the original site have since been made. Additional changes will be made moving forward.

See the updated site here: dsearles.com

Here is the original site: